AI Feedback Collection: Case Studies in Education

AI-powered feedback systems are changing how schools gather and use feedback, offering faster, more detailed insights than older methods like surveys. These tools help educators improve teaching in real time, making adjustments during a course rather than waiting until it’s over. For example:

- Stanford University (2023): AI feedback from the M-Powering Teachers tool increased mentors' engagement with student contributions by 10% and reduced their talk time by 5%.

- UC Santa Cruz (2025): AI chatbots collected 23 responses in 5 minutes, identifying issues like mismatched readings and lectures.

- University of Newcastle (2020-2025): AI processed open-ended feedback and doubled survey response rates.

- Georgia Tech (2023): An AI teaching assistant improved student outcomes, with more students earning "A" grades (66% vs. 62%).

These systems save time, handle large-scale data, and provide actionable feedback. However, success depends on ethical guidelines, human oversight, and using AI as a tool for growth rather than surveillance. Schools can use AI to improve teaching while keeping educators at the center of decision-making.

Case Studies: Educational Institutions Using AI for Feedback

Building on earlier discussions about the advantages of AI in feedback, these real-world examples highlight how universities have embraced AI to reshape their feedback processes. Each institution tackled distinct challenges, ranging from managing large volumes of qualitative data to making swift course adjustments. Through AI, they discovered tailored solutions that brought measurable improvements.

Case Study 1: Explorance MLY for Qualitative Feedback

From 2020 to 2025, the University of Newcastle in Australia overhauled its feedback system by replacing 30 quantitative survey questions with a single open-ended prompt. Under the leadership of Meagan Morrissey, the university adopted Explorance MLY to process and analyze vast amounts of qualitative feedback. This shift doubled survey response rates, with AI-powered models - specifically trained in higher education language - identifying key themes and providing actionable suggestions, such as increasing online tutorial offerings.

"We were reading comments most of the year, and now MLY is processing that workload in minutes. The pre-trained models were a key difference for us because other off-the-shelf text analytics tools weren't trained using Higher Education comments. With MLY, we're speaking the same language." – Meagan Morrissey, Manager, Student and Staff Insights, University of Newcastle

Abu Dhabi University faced a similar challenge with multilingual feedback and also turned to Explorance MLY. The system analyzed comments in both Arabic and English, flagged concerning feedback, and automatically redacted sensitive information. This allowed staff to focus on strategic improvements instead of time-consuming manual data handling. Explorance MLY’s impact was further recognized when it earned accolades in the "AI in Education" category at the 2024 QS Reimagine Education Awards and was named a Top 20 Assessment and Evaluation Company in 2025.

Case Study 2: Jill Watson AI Teaching Assistant at Georgia Tech

Georgia Tech showcased another creative use of AI in education with the introduction of the Jill Watson AI teaching assistant in Fall 2023. Designed for an Online Master of Science in Computer Science course with over 600 students, Jill Watson was developed by Professor Ashok Goel and the DILab team. This AI assistant incorporated ChatGPT technology but limited its responses to verified course materials using textual entailment.

Jill Watson delivered impressive results. It achieved accuracy rates between 75% and 97% on synthetic test sets and successfully answered student questions 78.7% of the time in real classroom scenarios. The failure rate was notably low at 2.7%, compared to 14.4% in similar systems. Additionally, the AI assistant had a positive impact on academic outcomes - students using Jill Watson earned more "A" grades (66% versus 62%) and fewer "C" grades (3% versus 7%).

What These Case Studies Reveal About AI Feedback Systems

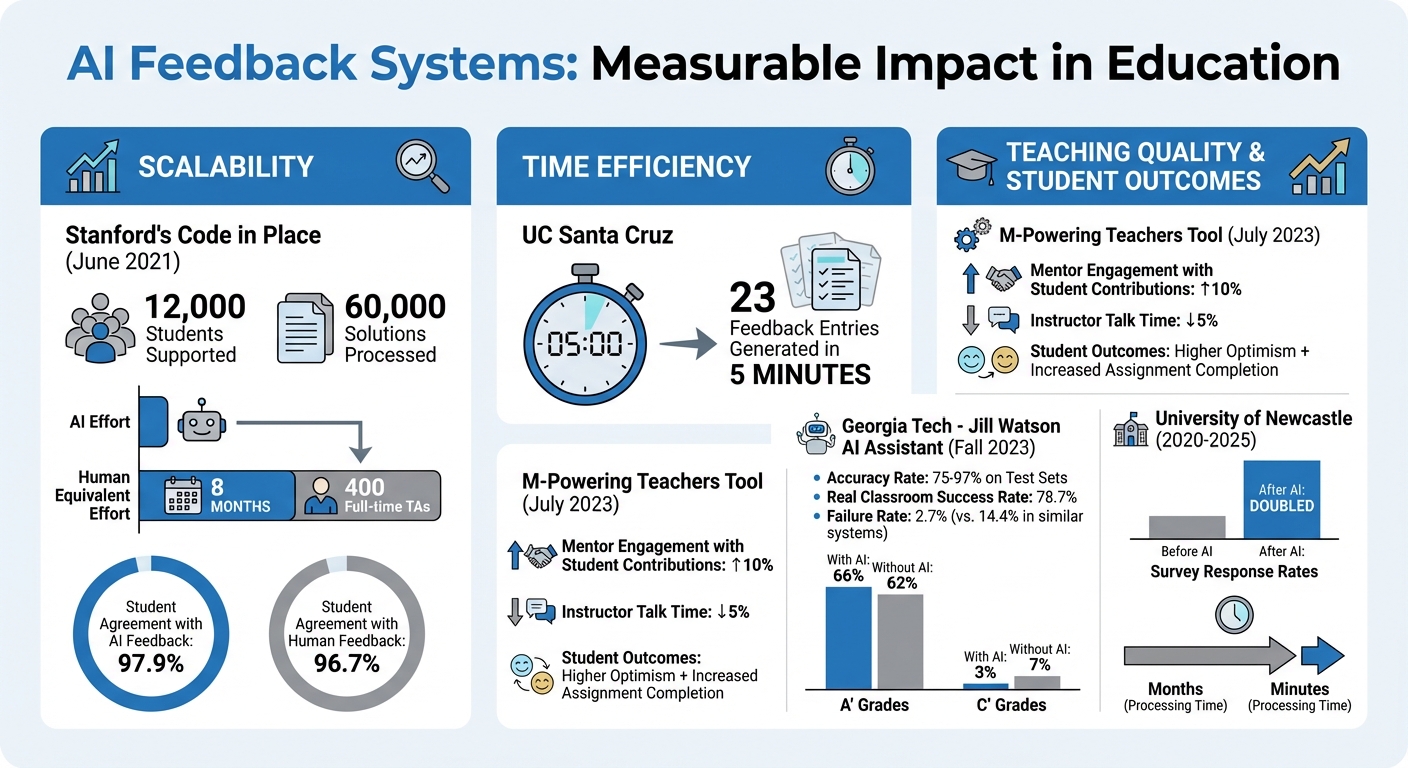

AI Feedback Systems in Education: Key Statistics and Outcomes from University Case Studies

The case studies above highlight three major advantages of AI feedback systems in education.

Scalability emerges as a standout feature. For example, Stanford's Code in Place program in June 2021 handled feedback for a staggering 12,000 students and 60,000 solutions. To put this into perspective, achieving the same results would have required 8 months of human effort or 400 teaching assistants working full-time. Impressively, students agreed with the AI-generated feedback 97.9% of the time, slightly surpassing the 96.7% agreement rate for feedback from human instructors. This demonstrates how AI can efficiently manage large-scale educational tasks while maintaining quality.

Another key benefit is time efficiency. At UC Santa Cruz, an instructor's AI chatbot collected 23 student responses in just 5 minutes, a turnaround that traditional methods like surveys rarely match.

The third benefit is improvements in teaching quality and student outcomes. A study conducted in July 2023 by researchers Dorottya Demszky and Jing Liu tested the M-Powering Teachers tool with 414 mentors at Polygence. The results were compelling: AI feedback increased mentors' engagement with student contributions by 10% and reduced instructor talk time by 5%. Students also reported feeling more optimistic about their academic futures and showed higher assignment completion rates.

These examples underscore how AI feedback systems tackle a critical issue in education - providing personalized, actionable feedback on a large scale without overburdening human resources. Traditional methods, like surveys, often arrive too late to make meaningful changes during a course, and human observation can be both costly and hard to scale. AI fills this gap by offering consistent, timely insights that instructors can use right away. These findings highlight AI's growing role in reshaping feedback processes in education.

How Educational Institutions Can Apply These Lessons

For schools aiming to integrate AI feedback systems, the first step is to establish clear ethical guidelines before launching any pilot programs. Take EdNovate Charter Schools as an example - they developed a framework that tackled critical issues like safety, privacy, equity, and accountability. This kind of proactive planning helps schools address concerns about data privacy and algorithmic bias early on, laying a strong foundation for adopting AI in feedback systems.

It’s also important to position AI as a tool for professional growth rather than as an evaluation mechanism. Chris Piech from Stanford highlights the value of this approach:

"It's much more comfortable to engage with feedback that's not coming from your principal, and you can get it not just after years of practice but from your first day on the job."

By Fall 2024, 43% of teachers had already participated in at least one AI training session - a notable 50% increase from the previous spring. Framing AI as a self-reflection tool, rather than as a form of administrative oversight, may help turn that growing familiarity into broader adoption.

Once ethical guidelines are in place, schools need to focus on building a robust technical infrastructure to scale AI systems effectively across diverse environments. High-quality audio capture and well-defined performance metrics are essential: poor transcription or mismatched metrics could derail the feedback process. For example, schools can use a performance matrix to categorize students into four quadrants based on their time investment and performance levels. This allows teachers to identify patterns and provide targeted support. Students who put in significant effort but show low outcomes, for instance, may benefit from specific interventions.
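
The four-quadrant idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `StudentRecord` fields, the cutoff values, and the quadrant labels are hypothetical and do not come from any of the tools discussed in this article.

```python
from dataclasses import dataclass


@dataclass
class StudentRecord:
    name: str
    hours_spent: float  # hypothetical measure of time investment
    score: float        # hypothetical performance score (0-100)


def quadrant(record: StudentRecord, hours_cutoff: float, score_cutoff: float) -> str:
    """Place one student in one of four effort/performance quadrants."""
    high_effort = record.hours_spent >= hours_cutoff
    high_score = record.score >= score_cutoff
    if high_effort and high_score:
        return "high effort / high performance"
    if high_effort:
        return "high effort / low performance"
    if high_score:
        return "low effort / high performance"
    return "low effort / low performance"


def group_by_quadrant(records, hours_cutoff: float, score_cutoff: float) -> dict:
    """Map each quadrant label to the names of the students in it."""
    groups: dict = {}
    for record in records:
        label = quadrant(record, hours_cutoff, score_cutoff)
        groups.setdefault(label, []).append(record.name)
    return groups


# Illustrative data only; the cutoffs are arbitrary assumptions.
records = [
    StudentRecord("Student A", hours_spent=12.0, score=55.0),
    StudentRecord("Student B", hours_spent=3.0, score=88.0),
]
print(group_by_quadrant(records, hours_cutoff=8.0, score_cutoff=70.0))
```

Students who land in the "high effort / low performance" group are the ones the paragraph above singles out for targeted intervention. In practice the cutoffs would need to be chosen by the teaching team, not hard-coded.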

To ensure fairness, schools should implement multi-agent systems with "Equity Monitors" designed to detect biased language and maintain consistent feedback across all student demographics. Research at UC Santa Cruz supports this approach, showing that informal and impartial chatbot personas encourage more candid student responses. As one student participant explained:

"If the character can be a bit more informal, then people would reply more, respond more freely, more candidly. It needs to be impartial - not like a TA or professor - so students can feel freer."

Finally, maintaining human oversight is key to leveraging AI effectively. AI can handle tasks like initial data processing and pattern identification, but teachers should focus on applying those insights and driving meaningful interventions. Encouraging students to critique AI-generated feedback can also help build critical AI literacy. This "human-in-the-loop" approach ensures that AI supports, rather than replaces, human judgment, creating a balanced and effective system.

Conclusion

The examples shared throughout this article highlight how AI-powered feedback systems are transforming how feedback is gathered and analyzed in education. Take Stanford's M-Powering Teachers tool, for instance - it boosted mentors' engagement with student input by 10%. Similarly, at UC Santa Cruz, an AI chatbot collected 23 responses in just five minutes during a pilot program. These results showcase how AI is reshaping feedback processes, paving the way for broader use in classrooms and beyond.

One of the key advantages of this approach is the shift from traditional end-of-term surveys to real-time, mid-course feedback analysis. With early insights, instructors can make adjustments that benefit their current students, rather than waiting for changes to impact future classes. These case studies emphasize how AI feedback systems enable educators to respond quickly and effectively.

However, the success of these tools depends on human involvement. Rather than being a form of surveillance, AI serves as a resource for professional growth. As Chris Piech, Assistant Professor of Computer Science Education at Stanford University, explains:

"It's much more comfortable to engage with feedback that's not coming from your principal, and you can get it not just after years of practice but from your first day on the job."

This "human-in-the-loop" approach ensures that AI complements, rather than replaces, human decision-making. Teachers remain at the center, using AI insights to drive meaningful changes in their classrooms. Altogether, these examples offer a clear path for educational institutions to adopt AI feedback systems that improve teaching quality and student outcomes - all while striking a balance between automation and the nuanced understanding that only humans can provide.

FAQs

How can AI feedback tools help teachers make real-time improvements in the classroom?

AI feedback tools give educators the ability to make quick, informed decisions by offering real-time insights into what's happening in their classrooms. For instance, automated systems can evaluate teacher-student interactions, pinpointing moments where teachers successfully build on student input. This kind of analysis helps teachers adjust and refine their techniques for future lessons.

One study found that teachers who received AI-generated feedback via email boosted their use of effective questioning strategies by 20%. Others used AI-powered conversational surveys to collect student reflections, allowing them to tweak lessons mid-course. These tools make it easier to adjust pacing, clarify explanations, or modify activities right when it matters most. By turning delayed evaluations into immediate, actionable feedback, AI systems help educators fine-tune their teaching methods in real time, improving both student engagement and satisfaction.

What ethical issues should schools consider when using AI for feedback collection?

Schools integrating AI-driven feedback tools need to put student privacy front and center. This means being clear about how data is collected, stored, and used. From the beginning, schools should implement strong policies around consent, data minimization, and secure handling to safeguard sensitive information.

Addressing bias is equally important. AI systems can sometimes unintentionally favor certain demographic groups, which risks deepening existing inequalities. For instance, resource gaps have been shown to exacerbate achievement disparities, especially for underserved communities. Conducting regular fairness audits and adopting bias-reduction strategies are essential steps to ensure equity.

Teachers also play a key role in this process. They need proper training to understand and interpret AI-generated recommendations and step in when necessary. Relying too heavily on AI without human oversight can lead to mistakes. To further build trust, schools should give students the option to opt out of AI-based systems, ensuring transparency and accountability in the feedback process.

How does AI promote fairness and inclusivity in student feedback?

AI-driven feedback systems aim to promote fairness by incorporating safeguards directly into the evaluation process. For instance, some systems utilize tools like an Equity Monitor, which flags potentially biased or exclusionary language in student submissions before assigning scores. Once scoring is complete, these systems conduct fairness checks by analyzing error rates across various demographic groups, allowing educators to address any disparities as they arise.
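
A post-scoring fairness check of this kind might look like the following minimal sketch. It assumes that each AI-assigned score can be compared against a human reference score and that a group label is available for each submission. The function names and the data layout are hypothetical, not taken from any specific product.

```python
from collections import defaultdict


def error_rates_by_group(rows):
    """Return the share of AI scores that disagree with the human score, per group.

    rows: iterable of (group_label, ai_score, human_score) tuples (assumed layout).
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, ai_score, human_score in rows:
        totals[group] += 1
        if ai_score != human_score:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the highest and lowest group error rates."""
    return max(rates.values()) - min(rates.values())


# Illustrative data only.
rows = [
    ("group_1", 3, 3),
    ("group_1", 2, 3),
    ("group_2", 4, 4),
    ("group_2", 1, 1),
]
rates = error_rates_by_group(rows)
print(rates, largest_gap(rates))
```

A large gap between groups does not prove bias on its own, but it tells educators where to review the AI's scoring by hand.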

These systems also enhance inclusivity by delivering clear, bias-conscious feedback and encouraging students to engage in self-reflection through structured prompts. By actively monitoring and addressing potential biases, AI helps large-scale courses deliver consistent, high-quality feedback to students from all backgrounds.